A recurrent neural network (RNN) is a class of artificial neural networks in which connections between nodes form a directed graph along a temporal sequence. This allows it to exhibit temporal dynamic behavior. Unlike feedforward neural networks, RNNs can use their internal state (memory) to process sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition.

The term "recurrent neural network" is used indiscriminately to refer to two broad classes of networks with a similar general structure, where one is finite impulse and the other is infinite impulse. Both classes of networks exhibit temporal dynamic behavior. A finite impulse recurrent network is a directed acyclic graph that can be unrolled and replaced with a strictly feedforward neural network, while an infinite impulse recurrent network is a directed cyclic graph that cannot be unrolled.

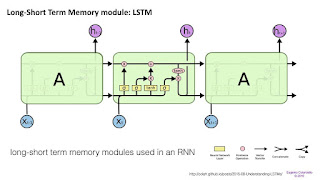

Both finite impulse and infinite impulse recurrent networks can have additional stored state, and the storage can be under direct control by the neural network. The storage can also be replaced by another network or graph, if that incorporates time delays or has feedback loops. Such controlled states are referred to as gated state or gated memory, and are part of long short-term memory networks (LSTMs) and gated recurrent units.


History

Recurrent neural networks were developed in the 1980s. Hopfield networks were introduced by John Hopfield in 1982. In 1993, a neural history compressor system solved a "Very Deep Learning" task that required more than 1000 subsequent layers in an RNN unfolded in time.

LSTM

Long short-term memory (LSTM) networks were invented by Hochreiter and Schmidhuber in 1997 and set accuracy records in multiple application domains.

Around 2007, LSTM started to revolutionize speech recognition, outperforming traditional models in certain speech applications. In 2009, an LSTM network trained with Connectionist Temporal Classification (CTC) was the first RNN to win pattern recognition contests when it won several competitions in connected handwriting recognition. In 2014, the Chinese search giant Baidu used CTC-trained RNNs to break the Hub5'00 speech recognition benchmark without using any traditional speech processing methods.

LSTM also improved large-vocabulary speech recognition and text-to-speech synthesis and was used in Google Android. In 2015, Google's speech recognition reportedly experienced a dramatic performance jump of 49% through CTC-trained LSTM, which was used by Google voice search.

LSTM broke records for improved machine translation, language modeling, and multilingual language processing. LSTM combined with convolutional neural networks (CNNs) improved automatic image captioning.


Architecture

RNNs come in many variants.

Fully recurrent

Basic RNNs are a network of neuron-like nodes, each with a directed (one-way) connection to every other node. Each node (neuron) has a time-varying real-valued activation. Each connection (synapse) has a modifiable real-valued weight. Nodes are either input nodes (receiving data from outside the network), output nodes (yielding results), or hidden nodes (which modify the data en route from input to output).

For supervised learning in discrete time settings, sequences of real-valued input vectors arrive at the input nodes, one vector at a time. At any given time step, each non-input unit computes its current activation (result) as a nonlinear function of the weighted sum of the activations of all units that connect to it. Supervisor-given target activations can be supplied for some output units at certain time steps. For example, if the input sequence is a speech signal corresponding to a spoken digit, the final target output at the end of the sequence may be a label classifying the digit.

In reinforcement learning settings, no teacher provides target signals. Instead, a fitness function or reward function is occasionally used to evaluate the RNN's performance, which influences its input stream through output units connected to actuators that affect the environment. This might be used to play a game in which progress is measured by the number of points won.

Each sequence produces an error as the sum of the deviations of all target signals from the corresponding activations computed by the network. For a training set of numerous sequences, the total error is the sum of the errors of all individual sequences.
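The forward pass and error computation described above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function names, the `tanh` activation, the linear output layer, and the use of squared deviations are assumptions made here, not details given in the text.

```python
import math

def rnn_forward(inputs, W_in, W_rec, W_out, bias):
    """Run a small recurrent network over a sequence of input vectors."""
    n_hidden = len(W_rec)
    h = [0.0] * n_hidden                     # hidden activations start at zero
    outputs = []
    for x in inputs:                         # one input vector per time step
        new_h = []
        for i in range(n_hidden):
            # weighted sum of current inputs and previous hidden activations
            s = bias[i]
            s += sum(W_in[i][k] * x[k] for k in range(len(x)))
            s += sum(W_rec[i][j] * h[j] for j in range(n_hidden))
            new_h.append(math.tanh(s))       # nonlinear activation (assumed tanh)
        h = new_h
        outputs.append([sum(W_out[o][i] * h[i] for i in range(n_hidden))
                        for o in range(len(W_out))])
    return outputs

def sequence_error(outputs, targets):
    """Sum of squared deviations; a target of None means no target at that step."""
    err = 0.0
    for y, t in zip(outputs, targets):
        if t is not None:
            err += sum((yi - ti) ** 2 for yi, ti in zip(y, t))
    return err

def total_error(sequences, targets, params):
    """Total error is the sum of the errors of the individual sequences."""
    return sum(sequence_error(rnn_forward(seq, *params), tgt)
               for seq, tgt in zip(sequences, targets))

# Hypothetical example: one input, two hidden units, one output,
# with a target supplied only at the final time step.
params = ([[0.5], [-0.3]], [[0.1, 0.2], [0.0, -0.1]], [[1.0, -1.0]], [0.0, 0.0])
print(total_error([[[1.0], [0.0], [1.0]]], [[None, None, [1.0]]], params))
```

Passing `None` as a target mirrors the case in which the supervisor provides a label only at the end of the sequence.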

Independently recurrent (IndRNN)

Independently recurrent neural networks (IndRNN) address the vanishing and exploding gradient problems of traditional fully connected RNNs. Each neuron in a layer receives only its own past state as context information, so the neurons in a layer are independent of each other. The backpropagated gradients can be regulated to avoid vanishing and exploding while retaining long-term memory as well as memories of various ranges. Cross-neuron information is explored in the next layer. IndRNN can be trained robustly with popular non-saturating nonlinear functions such as ReLU. It can also learn very long-term dependencies and be stacked into very deep networks.
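As a rough sketch of the idea, one IndRNN layer step can be written with a recurrent weight vector instead of a recurrent weight matrix. The names `indrnn_step`, `W`, `u`, and `b` are chosen here for illustration and are not taken from the text.

```python
def relu(v):
    return v if v > 0.0 else 0.0

def indrnn_step(x, h_prev, W, u, b):
    """One layer step: h_i = relu(W_i . x + u_i * h_prev_i + b_i).

    u is a vector, not a matrix, so neuron i sees only its own previous state.
    """
    return [relu(sum(W[i][k] * x[k] for k in range(len(x)))
                 + u[i] * h_prev[i] + b[i])
            for i in range(len(u))]

def indrnn_layer(inputs, W, u, b):
    """Run the layer over a sequence, returning the state after each step."""
    h = [0.0] * len(u)
    states = []
    for x in inputs:
        h = indrnn_step(x, h, W, u, b)
        states.append(h)
    return states
```

Because each neuron's recurrence involves a single scalar `u[i]`, the gradient flowing back through k steps of that neuron scales with powers of `u[i]`, which is what makes it possible to bound it by constraining the magnitude of `u[i]`.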

Recursive

Recursive neural networks are created by applying the same set of weights recursively over a differentiable graph-like structure, traversing the structure in topological order. Such networks are typically also trained by the reverse mode of automatic differentiation. They can process distributed representations of structure, such as logical terms. A special case of recursive neural networks is the RNN, whose structure corresponds to a linear chain. Recursive neural networks have been applied to natural language processing. The Recursive Neural Tensor Network uses a tensor-based composition function for all nodes in the tree.

Hopfield

The Hopfield network is an RNN in which all connections are symmetric. It requires stationary inputs and is thus not a general RNN, as it does not process sequences of patterns. The symmetry guarantees that it will converge. If the connections are trained using Hebbian learning, then the Hopfield network can serve as a robust content-addressable memory, resistant to connection alteration.
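A minimal sketch of Hebbian storage and recall in a Hopfield network is shown below, assuming bipolar (+1/-1) units and asynchronous updates. The function names and the sweep limit are illustrative choices, not details from the text.

```python
def hebbian_weights(patterns):
    """Hebbian rule: w_ij = (1/P) * sum over patterns of s_i * s_j, no self-connections."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j] / len(patterns)
    return W   # symmetric by construction: W[i][j] == W[j][i]

def recall(W, state, max_sweeps=20):
    """Update units one at a time until no unit changes (a fixed point)."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = sum(W[i][j] * s[j] for j in range(len(s)))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

stored = [1, -1, 1, -1, 1, -1]
W = hebbian_weights([stored])
noisy = [-1, -1, 1, -1, 1, -1]      # first unit flipped
print(recall(W, noisy) == stored)
```

The corrupted pattern acts as a partial key, and the network settles back to the stored pattern, which is the content-addressable behavior described above.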

Bidirectional associative memory

Introduced by Bart Kosko, a bidirectional associative memory (BAM) network is a variant of a Hopfield network that stores associative data as a vector. The bi-directionality comes from passing information through a matrix and its transpose. Typically, bipolar encoding is preferred to binary encoding of the associative pairs. More recently, stochastic BAM models using Markov stepping were optimized for increased network stability and relevance to real-world applications.

A BAM network has two layers, either of which can be driven as an input to recall an association and produce an output on the other layer.

Elman networks and Jordan networks

The Elman network is a three-layer network (arranged horizontally as x, y, and z in the illustration) with the addition of a set of "context units" (u in the illustration). The middle (hidden) layer is connected to these context units with a fixed weight of one. At each time step, the input is fed forward and a learning rule is applied. The fixed back-connections save a copy of the previous values of the hidden units in the context units (since they propagate over the connections before the learning rule is applied). Thus the network can maintain a sort of state, allowing it to perform tasks such as sequence prediction that are beyond the power of a standard multilayer perceptron.

Jordan networks are similar to Elman networks. The context units are fed from the output layer instead of the hidden layer. The context units in a Jordan network are also referred to as the state layer. They have a recurrent connection to themselves.

Variables and functions

- $x_t$: input vector

- $h_t$: hidden layer vector

- $y_t$: output vector

- $W$, $U$ and $b$: parameter matrices and vector

- $\sigma_h$ and $\sigma_y$: activation functions
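The difference between the two networks can be illustrated with a short sketch. It assumes the commonly used formulation in which the hidden layer is computed as h = σ_h(W_h x + U_h c + b_h) and the output as y = σ_y(W_y h + b_y), where the context c is the previous hidden vector for an Elman network and the previous output vector for a Jordan network. Using `tanh` for both activations and the helper names below are assumptions made for illustration.

```python
import math

def affine(W, v, b):
    return [sum(W[i][k] * v[k] for k in range(len(v))) + b[i] for i in range(len(W))]

def matvec(U, v):
    return [sum(U[i][k] * v[k] for k in range(len(v))) for i in range(len(U))]

def step(x, context, W_h, U_h, b_h, W_y, b_y):
    """h = tanh(W_h x + U_h context + b_h); y = tanh(W_y h + b_y)."""
    pre = [a + c for a, c in zip(affine(W_h, x, b_h), matvec(U_h, context))]
    h = [math.tanh(s) for s in pre]
    y = [math.tanh(s) for s in affine(W_y, h, b_y)]
    return h, y

def run(inputs, params, kind="elman", n_hidden=2, n_out=1):
    """params = (W_h, U_h, b_h, W_y, b_y); kind selects where the context comes from."""
    context = [0.0] * (n_hidden if kind == "elman" else n_out)
    ys = []
    for x in inputs:
        h, y = step(x, context, *params)
        context = h if kind == "elman" else y   # the only difference between the two
        ys.append(y)
    return ys
```

Note that the shape of `U_h` differs between the two cases: it maps from the hidden layer in the Elman case and from the output layer in the Jordan case.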

Echo state

The echo state network (ESN) has a sparsely connected random hidden layer. The weights of the output neurons are the only part of the network that can change (be trained). ESNs are good at reproducing certain time series. A variant for spiking neurons is known as a liquid state machine.

Neural history compressor

The neural history compressor is an unsupervised stack of RNNs. At the input level, it learns to predict its next input from the previous inputs. Only the unpredictable inputs of an RNN in the hierarchy become inputs to the next higher-level RNN, which therefore recomputes its internal state only rarely. Each higher-level RNN thus learns a compressed representation of the information in the RNN below. This is done in such a way that the input sequence can be precisely reconstructed from the representation at the highest level.

The system effectively minimizes the description length or the negative logarithm of the probability of the data. Given a lot of learnable predictability in the incoming data sequence, the highest-level RNN can use supervised learning to easily classify even deep sequences with long intervals between important events.

It is possible to distill the RNN hierarchy into two RNNs: the "conscious" chunker (higher level) and the "subconscious" automatizer (lower level). Once the chunker has learned to predict and compress inputs that are unpredictable by the automatizer, the automatizer can be forced in the next learning phase to predict or imitate, through additional units, the hidden units of the more slowly changing chunker. This makes it easy for the automatizer to learn appropriate, rarely changing memories across long intervals. In turn, this helps the automatizer to make many of its once unpredictable inputs predictable, so that the chunker can focus on the remaining unpredictable events.

In 1992, a generative model partially overcame the vanishing gradient problem of automatic differentiation or backpropagation in neural networks. In 1993, such a system solved a "Very Deep Learning" task that required more than 1000 subsequent layers in an RNN unfolded in time.

Long short-term memory

Long short-term memory (LSTM) is a deep learning system that avoids the vanishing gradient problem. LSTM is normally augmented by recurrent gates called "forget" gates. LSTM prevents backpropagated errors from vanishing or exploding. Instead, errors can flow backwards through unlimited numbers of virtual layers unfolded in space. That is, LSTM can learn tasks that require memories of events that happened thousands or even millions of discrete time steps earlier. Problem-specific LSTM-like topologies can be evolved. LSTM works even given long delays between significant events and can handle signals that mix low and high frequency components.
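To make the gating concrete, here is a minimal sketch of one step of a single-unit LSTM cell with input, forget, and output gates. It uses scalars instead of vectors to stay short; the parameter layout (a dictionary of `(w_x, w_h, b)` triples) and the gate names are assumptions made for this illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell.

    p maps each of "forget", "input", "output", "candidate" to (w_x, w_h, b).
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b
    f = sigmoid(pre("forget"))         # how much of the old cell state to keep
    i = sigmoid(pre("input"))          # how much new information to write
    o = sigmoid(pre("output"))         # how much of the cell state to expose
    g = math.tanh(pre("candidate"))    # candidate value to write
    c = f * c_prev + i * g             # additive cell-state update
    h = o * math.tanh(c)
    return h, c

# Hypothetical parameters: forget gate held almost fully open, input gate almost closed.
p = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
     "output": (0.0, 0.0, 0.0), "candidate": (0.0, 0.0, 0.0)}
h, c = 0.0, 1.0
for _ in range(1000):
    h, c = lstm_step(0.0, h, c, p)
print(c)   # the stored value has decayed only slightly after 1000 steps
```

Because the cell state is updated additively and scaled only by the forget gate, a gate value close to one lets both the stored value and the backpropagated error survive across many time steps.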

Many applications use stacks of LSTM RNNs and train them by Connectionist Temporal Classification (CTC) to find an RNN weight matrix that maximizes the probability of the label sequences in a training set, given the corresponding input sequences. CTC achieves both alignment and recognition.

LSTM can learn to recognize context-sensitive languages, unlike previous models based on hidden Markov models (HMM) and similar concepts.

Second order RNNs

Second order RNNs use higher order weights instead of the standard weights, and inputs and states can be combined as a product. This allows a direct mapping to a finite state machine in training, stability, and representation. Long short-term memory is an example of this, but it has no such formal mapping or proof of stability.

Gated recurrent unit

Gated recurrent units (GRUs) are a gating mechanism in recurrent neural networks introduced in 2014. They are used in the full form and in several simplified variants. Their performance on polyphonic music modeling and speech signal modeling was found to be similar to that of long short-term memory. They have fewer parameters than LSTM, as they lack an output gate.
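A single-unit GRU step can be sketched as follows, using scalars for brevity. The parameter layout and names are assumptions for illustration, and the interpolation convention shown here (the update gate weights the candidate state) is one of two conventions found in the literature.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One step of a single-unit GRU.

    p maps "update", "reset", "candidate" to (w_x, w_h, b).
    """
    wz, uz, bz = p["update"]
    wr, ur, br = p["reset"]
    wc, uc, bc = p["candidate"]
    z = sigmoid(wz * x + uz * h_prev + bz)                 # update gate
    r = sigmoid(wr * x + ur * h_prev + br)                 # reset gate
    h_tilde = math.tanh(wc * x + uc * (r * h_prev) + bc)   # candidate state
    return (1.0 - z) * h_prev + z * h_tilde                # no output gate
```

The sketch needs three parameter groups where an LSTM cell with forget and output gates needs four, which is the source of the smaller parameter count mentioned above.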

Bi-directional

Bi-directional RNNs use a finite sequence to predict or label each element of the sequence based on the element's past and future contexts. This is done by concatenating the outputs of two RNNs, one processing the sequence from left to right, the other from right to left. The combined outputs are the predictions of the teacher-given target signals. This technique proved to be especially useful when combined with LSTM RNNs.
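The combination of the two directions can be sketched with a scalar `tanh` RNN standing in for each direction. The helper names and the scalar simplification are assumptions made here for illustration.

```python
import math

def run_rnn(xs, w_x, w_h):
    """Scalar tanh RNN; returns the hidden state after each element."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

def bidirectional(xs, fwd_params, bwd_params):
    forward = run_rnn(xs, *fwd_params)                 # left to right
    backward = run_rnn(xs[::-1], *bwd_params)[::-1]    # right to left, re-aligned
    # position t now carries past context (forward[t]) and future context (backward[t])
    return [(f, b) for f, b in zip(forward, backward)]
```

In this sketch, changing the last element of the input alters the backward component at position 0 but leaves the forward component there unchanged, which shows that each position sees both its past and its future.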

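Continuous-time

A continuous-time recurrent neural network (CTRNN) uses a system of ordinary differential equations to model the effects on a neuron of the incoming spike train.

For a neuron $i$ in the network with action potential $y_i$, the rate of change of activation is given by:

$$\tau_i \dot{y}_i = -y_i + \sum_{j=1}^{n} w_{ji}\,\sigma(y_j - \Theta_j) + I_i(t)$$

Here $\tau_i$ is the time constant of the postsynaptic node, $w_{ji}$ is the weight of the connection from node $j$ to node $i$, $\sigma$ is the sigmoid function, $\Theta_j$ is the bias of the presynaptic node, and $I_i(t)$ is the external input to the node, if any.

CTRNNs have been applied to evolutionary robotics, where they have been used to address vision, cooperation, and minimal cognitive behavior.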
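A CTRNN is usually simulated by numerically integrating its differential equations. The sketch below assumes the commonly used form tau_i * dy_i/dt = -y_i + sum_j w_ji * sigmoid(y_j - theta_j) + I_i and a simple forward Euler step. The function names and the step size are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ctrnn_euler_step(y, tau, w, theta, inputs, dt):
    """Advance every neuron by one Euler step of size dt.

    w[j][i] is the weight of the connection from neuron j to neuron i.
    """
    n = len(y)
    new_y = []
    for i in range(n):
        drive = sum(w[j][i] * sigmoid(y[j] - theta[j]) for j in range(n))
        dy_dt = (-y[i] + drive + inputs[i]) / tau[i]
        new_y.append(y[i] + dt * dy_dt)
    return new_y

# A single unconnected neuron with constant input relaxes towards that input.
y = [0.0]
for _ in range(200):
    y = ctrnn_euler_step(y, tau=[1.0], w=[[0.0]], theta=[0.0], inputs=[0.5], dt=0.1)
print(y[0])
```

Forward Euler is the simplest choice and needs `dt` to be small relative to the smallest time constant; a higher-order integrator could be substituted without changing the model.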

Note that, by the Shannon sampling theorem, discrete-time recurrent neural networks can be viewed as continuous-time recurrent neural networks in which the differential equations have been transformed into equivalent difference equations. This transformation can be thought of as occurring after the post-synaptic node activation functions have been low-pass filtered but prior to sampling.

Hierarchical

Hierarchical RNNs connect their neurons in various ways to decompose hierarchical behavior into useful subprograms.

Recurrent multilayer perceptron network

Generally, a recurrent multilayer perceptron (RMLP) network consists of cascaded subnetworks, each of which contains multiple layers of nodes. Each of these subnetworks is feed-forward except for the last layer, which can have feedback connections. The subnetworks are connected to each other only by feed-forward connections.

Multiple timescales model

A multiple timescales recurrent neural network (MTRNN) is a neural-based computational model that can simulate the functional hierarchy of the brain through self-organization that depends on the spatial connections between neurons and on distinct types of neuron activities, each with distinct time properties. With such varied neuronal activities, continuous sequences of any set of behaviors are segmented into reusable primitives, which in turn are flexibly integrated into diverse sequential behaviors. The biological plausibility of this type of hierarchy is discussed in the memory-prediction theory of brain function by Hawkins in his book On Intelligence.

Neural Turing Machine

Neural Turing machines (NTMs) are a method of extending recurrent neural networks by coupling them to external memory resources, which they can interact with through attentional processes. The combined system is analogous to a Turing machine or Von Neumann architecture but is differentiable end-to-end, allowing it to be efficiently trained with gradient descent.

Differentiable neural computer

Differentiable neural computers (DNCs) are an extension of Neural Turing machines, allowing for the use of fuzzy amounts of each memory address and a record of chronology.

Neural network pushdown automata

Neural network pushdown automata (NNPDA) are similar to NTMs, but the tapes are replaced by analogue stacks that are differentiable and trained. In this way, they are similar in complexity to recognizers of context-free grammars (CFGs).

Training

Gradient descent

Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function. In neural networks, it can be used to minimize the error term by changing each weight in proportion to the derivative of the error with respect to that weight, provided the non-linear activation functions are differentiable. Various methods for doing so were developed in the 1980s and early 1990s by Werbos, Williams, Robinson, Schmidhuber, Hochreiter, Pearlmutter and others.
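The weight update rule can be illustrated on the smallest possible case: one weight, one `tanh` unit, and a squared error. The function name, learning rate, and step count below are arbitrary choices made for this sketch.

```python
import math

def train_weight(w, x, target, lr=0.5, steps=200):
    """Gradient descent on E(w) = 0.5 * (tanh(w * x) - target)**2."""
    for _ in range(steps):
        y = math.tanh(w * x)
        dE_dw = (y - target) * (1.0 - y * y) * x   # chain rule through tanh
        w -= lr * dE_dw                            # step against the gradient
    return w

w = train_weight(0.0, 1.0, 0.5)
print(math.tanh(w))   # close to the target 0.5
```

The factor `1.0 - y * y` is the derivative of `tanh`, which is why the activation function has to be differentiable for this procedure to work.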

The standard method is called "backpropagation through time" or BPTT, and is a generalization of back-propagation for feed-forward networks. Like that method, it is an instance of automatic differentiation in the reverse accumulation mode of Pontryagin's minimum principle. A more computationally expensive online variant is called "Real-Time Recurrent Learning" or RTRL, which is an instance of automatic differentiation in the forward accumulation mode with stacked tangent vectors. Unlike BPTT, this algorithm is local in time but not local in space.

In this context, local in space means that a unit's weight vector can be updated using only information stored in the connected units and the unit itself, so that the update complexity of a single unit is linear in the dimensionality of the weight vector. Local in time means that the updates take place continually (on-line) and depend only on the most recent time step rather than on multiple time steps within a given time horizon as in BPTT. Biological neural networks appear to be local with respect to both time and space.

For recursively computing the partial derivatives, RTRL has a time complexity of O(number of hidden units x number of weights) per time step for computing the Jacobian matrices, while BPTT takes only O(number of weights) per time step, at the cost of storing all forward activations within the given time horizon. An online hybrid between BPTT and RTRL with intermediate complexity exists, along with variants for continuous time.

A major problem with gradient descent for standard RNN architectures is that error gradients vanish exponentially quickly with the size of the time lag between important events. LSTM combined with a BPTT/RTRL hybrid learning method attempts to overcome this problem. The problem is also addressed in the independently recurrent neural network (IndRNN) by reducing the context of a neuron to its own past state; the cross-neuron information can then be explored in the following layers. Memories of various ranges, including long-term memory, can be learned without the vanishing and exploding gradient problem.
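The exponential decay is easy to observe numerically. The sketch below tracks the derivative of the final state with respect to the initial state in a one-unit RNN h_t = tanh(w * h_{t-1}); the scalar setting and the function name are simplifying assumptions.

```python
import math

def gradient_through_time(w, h0, steps):
    """d h_T / d h_0 for h_t = tanh(w * h_{t-1}), accumulated with the chain rule."""
    h, grad, history = h0, 1.0, []
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)   # one Jacobian factor per time step
        history.append(grad)
    return history

history = gradient_through_time(w=0.5, h0=0.5, steps=50)
print(history[0], history[9], history[49])
```

Each step multiplies the gradient by a factor of magnitude at most `abs(w)`, so with `w = 0.5` the gradient after 50 steps is bounded by 0.5 to the power 50, which is effectively zero for learning purposes.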

The on-line algorithm called causal recursive backpropagation (CRBP) implements and combines the BPTT and RTRL paradigms for locally recurrent networks. It works with the most general locally recurrent networks. The CRBP algorithm can minimize the global error term. This fact improves the stability of the algorithm, providing a unifying view of gradient calculation techniques for recurrent networks with local feedback.

One approach to the computation of gradient information in RNNs with arbitrary architectures is based on the diagrammatic derivation of signal-flow graphs. It uses the BPTT batch algorithm, based on Lee's theorem for network sensitivity calculations. It was proposed by Wan and Beaufays, while its fast online version was proposed by Campolucci, Uncini and Piazza.

Global optimization methods

Training the weights in a neural network can be modeled as a non-linear global optimization problem. A target function can be formed to evaluate the fitness or error of a particular weight vector as follows: First, the weights in the network are set according to the weight vector. Next, the network is evaluated against the training sequence. Typically, the sum-squared difference between the predictions and the target values specified in the training sequence is used to represent the error of the current weight vector. Arbitrary global optimization techniques may then be used to minimize this target function.

The most common global optimization method for training RNNs is genetic algorithms, especially in unstructured networks.

Initially, the genetic algorithm is encoded with the neural network weights in a predefined manner, where one gene in the chromosome represents one weight link. The whole network is represented as a single chromosome. The fitness function is evaluated as follows:

- Each weight encoded in the chromosome is assigned to the respective weight link of the network.

- The training set is presented to the network, which propagates the input signals forward.

- The mean-squared error is returned to the fitness function.

- This function drives the genetic selection process.

Many chromosomes make up the population; therefore, many different neural networks are evolved until a stopping criterion is satisfied. Common stopping schemes are:

- When the neural network has learned a certain percentage of the training data or

- When the minimum value of the mean-squared error is satisfied or

- When the maximum number of training generations has been reached.

The stopping criterion is evaluated by the fitness function, as it receives the reciprocal of the mean-squared error of each network during training. Therefore, the goal of the genetic algorithm is to maximize the fitness function, thereby reducing the mean-squared error.
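The procedure above can be sketched for a one-unit RNN whose three weights form the chromosome. Everything specific in this sketch is an assumption made for illustration: the network size, truncation selection, one-point crossover, Gaussian mutation, and the parameter defaults.

```python
import math
import random

def rnn_outputs(chromosome, xs):
    """Decode the chromosome into the weights of a one-unit RNN and run it."""
    w_x, w_h, w_out = chromosome
    h, ys = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        ys.append(w_out * h)
    return ys

def fitness(chromosome, xs, targets):
    mse = sum((y - t) ** 2 for y, t in zip(rnn_outputs(chromosome, xs), targets)) / len(xs)
    return 1.0 / (mse + 1e-12)       # reciprocal of the mean-squared error

def evolve(xs, targets, pop_size=30, generations=100, target_mse=1e-4, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(pop_size)]
    for _ in range(generations):                  # stop: generation limit
        pop.sort(key=lambda c: fitness(c, xs, targets), reverse=True)
        if 1.0 / fitness(pop[0], xs, targets) <= target_mse:   # stop: error threshold
            break
        parents = pop[: pop_size // 2]            # selection: keep the fitter half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, 3)             # one-point crossover
            child = a[:cut] + b[cut:]
            k = rng.randrange(3)                  # mutate one gene
            child[k] += rng.gauss(0.0, 0.1)
            children.append(child)
        pop = parents + children
    return max(pop, key=lambda c: fitness(c, xs, targets))

best = evolve([0.0, 1.0, 0.0, 1.0], [0.0, 0.5, 0.2, 0.5], generations=30)
print(best, fitness(best, [0.0, 1.0, 0.0, 1.0], [0.0, 0.5, 0.2, 0.5]))
```

Keeping the fitter half of the population unchanged means the best fitness never decreases from one generation to the next, and no gradient information is needed at any point.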

Other global (and/or evolutionary) optimization techniques may be used to seek a good set of weights, such as simulated annealing or particle swarm optimization.

Related fields and models

RNNs may behave chaotically. In such cases, dynamical systems theory may be used for analysis.

RNNs are in fact recursive neural networks with a particular structure: that of a linear chain. Whereas recursive neural networks operate on any hierarchical structure, combining child representations into parent representations, recurrent neural networks operate on the linear progression of time, combining the previous time step and a hidden representation into the representation for the current time step.

In particular, RNNs can appear as nonlinear versions of finite impulse response and infinite impulse response filters, and also as a nonlinear autoregressive exogenous model (NARX).

Libraries

- PyTorch: Tensors and dynamic neural networks in Python with strong GPU acceleration.

- Apache Singa

- Caffe: Created by the Berkeley Vision and Learning Center (BVLC). It supports both CPU and GPU. Developed in C++, and has Python and MATLAB wrappers.

- Deeplearning4j: Deep learning in Java and Scala on multi-GPU-enabled Spark. A general-purpose deep learning library for the JVM production stack running on a C++ scientific computing engine. Allows the creation of custom layers. Integrates with Hadoop and Kafka.

- DyNet: The Dynamic Neural Network Toolkit.

- Keras

- Microsoft Cognitive Toolkit

- TensorFlow: Apache 2.0-licensed Theano-like library with support for CPU, GPU, Google's TPU, and mobile devices.

- Theano: The reference deep learning library for Python, with an API largely compatible with the popular NumPy library. Allows users to write symbolic mathematical expressions, then automatically generates their derivatives, saving users from having to code gradients or backpropagation. These symbolic expressions are automatically compiled to CUDA code for a fast, on-the-GPU implementation.

- Torch (www.torch.ch): A scientific computing framework with wide support for machine learning algorithms, written in C and Lua. The main author is Ronan Collobert, and it is now used at Facebook AI Research and Twitter.

- MXNet: a modern open-source deep learning framework used to train and deploy deep neural networks.

Applications

Applications of recurrent neural networks include:

- Robot control

- Time series prediction

- Speech recognition

- Rhythm learning

- Music composition

- Grammar learning

- Handwriting recognition

- Human action recognition

- Protein homology detection

- Predicting subcellular localization of proteins

- Several prediction tasks in the area of business process management

- Prediction in medical care pathways


External links

- RNNSharp: CRFs based on recurrent neural networks (C#, .NET)

- Recurrent Neural Networks, with over 60 RNN papers by Jürgen Schmidhuber's group at the Dalle Molle Institute for Artificial Intelligence Research

- Elman Neural Network implementation for WEKA

- Recurrent Neural Nets & LSTMs in Java

- An alternative attempt at a complete, reward-driven RNN

Source of the article : Wikipedia